AI Moderation Scrutinized Following JonBenet Ramsey-Epstein Hoax

A recent viral TikTok hoax linking JonBenet Ramsey to the Epstein files has intensified scrutiny of AI moderation and misinformation. The incident is a wake-up call for platforms about the risks posed by AI-generated content.

Event Overview

A TikTok video falsely linked the late JonBenet Ramsey to the Epstein files. Its rapid spread raised alarms about AI deepfake technology. John Ramsey, JonBenet’s father, publicly denounced the claim and identified the video as AI-generated. U.S. media reports backed his statement, noting that there is no evidence connecting JonBenet to these records.

Impact on Brand Safety and Regulations

The incident raises significant concerns for advertisers and investors. Misinformation of this kind can seriously damage brand reputation and safety. The UK’s Online Safety Act requires platforms to put robust systems in place to reduce the risk of illegal content, with particular emphasis on protecting children.

Key responsibilities for platforms:

- Assess and mitigate risks associated with misinformation.

- Provide clear reporting channels so users can flag abusive content.

- Conduct transparent risk assessments that cover AI deepfakes.

AI Deepfake Risks in the UK

Not all misinformation is illegal in the UK, but deceptive AI content still poses risks that platforms must recognize. Current regulations call for:

- Stricter labeling of AI-generated media.

- More accurate detection methods.

- Faster removal of harmful content.

Investor Insights

The JonBenet Ramsey Epstein files hoax may affect how investors assess platforms’ content moderation. Investors should monitor key performance indicators (KPIs) related to moderation:

- Average takedown times for violative clips.

- Views accumulated by content before it was removed.

- Appeal reversal rates.

- Incidents of ads appearing next to harmful content (brand adjacency).
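To make the first three KPIs concrete, here is a minimal Python sketch of how they could be computed from a batch of removed clips. It assumes a hypothetical `RemovedClip` record and `moderation_kpis` helper; neither is a real platform API, and in practice the inputs would come from sources such as platform transparency reports. Brand adjacency is left out because measuring it requires ad-placement data.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta


@dataclass
class RemovedClip:
    """Hypothetical record for one removed clip; field names are illustrative."""
    posted_at: datetime
    removed_at: datetime
    views_before_removal: int
    appealed: bool = False
    reinstated: bool = False  # True if an appeal overturned the removal


def moderation_kpis(clips: list[RemovedClip]) -> dict[str, float]:
    """Compute takedown time, pre-removal views and appeal reversal rate."""
    if not clips:
        raise ValueError("need at least one removed clip")
    # Average time from posting to removal, in hours.
    total_delay = sum((c.removed_at - c.posted_at for c in clips), timedelta())
    avg_takedown_hours = total_delay.total_seconds() / 3600 / len(clips)
    # Total views the removed clips collected while they were live.
    total_views = sum(c.views_before_removal for c in clips)
    # Share of appealed removals that were reversed (0.0 if none appealed).
    appeals = [c for c in clips if c.appealed]
    reversal_rate = (
        sum(c.reinstated for c in appeals) / len(appeals) if appeals else 0.0
    )
    return {
        "avg_takedown_hours": avg_takedown_hours,
        "views_before_removal": float(total_views),
        "appeal_reversal_rate": reversal_rate,
    }


t0 = datetime(2025, 1, 1, 12, 0)
sample = [
    RemovedClip(t0, t0 + timedelta(hours=2), 10_000, appealed=True),
    RemovedClip(t0, t0 + timedelta(hours=6), 50_000, appealed=True, reinstated=True),
]
print(moderation_kpis(sample))
# {'avg_takedown_hours': 4.0, 'views_before_removal': 60000.0, 'appeal_reversal_rate': 0.5}
```

Tracking these figures over time, rather than as one-off snapshots, is what would show whether a platform's detection and review investments are actually shortening exposure.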

Near-term Outlook

In the short term, platforms may face higher moderation costs as they improve their detection systems and expand review teams. If misinformation incidents spike, advertisers may become more cautious with their spending. A credible strategy built on creator verification and robust ad controls can help sustain advertiser confidence.

Conclusion

The hoax involving JonBenet Ramsey serves as a crucial lesson on the dangers of unchecked misinformation and deepfake technology. It underscores the need for platforms to prioritize user safety and ensure that rapid response mechanisms are in place. Platforms that deliver transparent risk assessments will foster greater trust among advertisers and audiences alike.

FAQs

- Is there any connection between JonBenet Ramsey and the Epstein files?

No. John Ramsey has publicly rejected this claim, calling the video false and AI-generated.

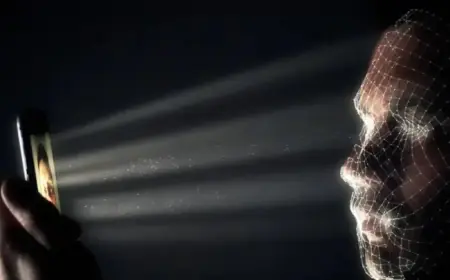

- What is an AI deepfake?

AI deepfakes are synthetic media that make people appear to say or do things they never said or did. They raise significant trust and safety concerns.

- How does the UK Online Safety Act influence social platforms?

It requires platforms to assess risks and implement effective measures to reduce harmful content, creating a safer environment for users.

- What can advertisers do following this hoax?

Advertisers should tighten brand safety settings, review blocklists, and press platforms for reporting on deepfake detection and brand safety metrics.