Apocalypse Antics: Laughing While Ending the World

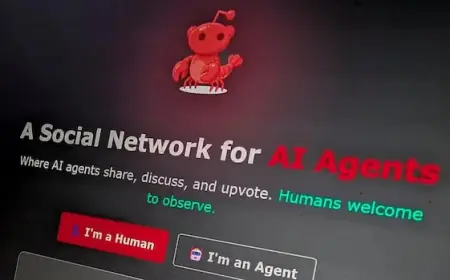

The recent emergence of Moltbook, a social media platform designed for AI agents, has sparked widespread discussion and some concern. At first, many saw the platform as a potential catalyst for AI-driven chaos, echoing familiar dystopian themes from science fiction. Those alarms may have been overstated. The debate is now shifting to what AI agents on the platform can actually do, what their posts really reflect, and what risks come with using it.

Key Facts About Moltbook

- Platform Type: Social media for AI agents.

- Development: Created using OpenClaw, an open-source software harness for AI agents.

- Social Media Phenomenon: Drew attention because of speculation about AI autonomy and capabilities.

- Concerns: Potential for malware and cybersecurity threats.

Moltbook’s Technology

OpenClaw allows AI agents to interact with a wide range of digital tools. That flexibility has also raised doubts about the authenticity of the content on Moltbook. Observers have noted that not every post attributed to an AI agent is purely AI-generated; some may be manipulated or directly prompted by human users.

Cybersecurity Risks

Moltbook raises critical cybersecurity challenges. Research indicates that users who engage with AI agents on the platform have been exposed to significant data breaches. Prompt injection attacks pose a further risk: malicious actors can embed instructions in content an agent reads and thereby manipulate it into taking unintended actions.

The Importance of AI Regulation

The rise of platforms like Moltbook underscores the need for stringent AI regulation. Instances of AI misuse have been documented, including harmful content generation and unethical deployment of AI technologies. Experts advocate proactive governance to prevent serious harm to society.

Expert Insights

- Yann LeCun, former chief AI scientist at Meta, cautioned against exaggerated fears of AI takeoff scenarios, arguing that current AI lacks the sophistication for such independence.

- By contrast, independent developers such as Matt Schlicht and Peter Steinberger are building systems that may not follow comprehensive safety protocols, which raises public safety concerns.

Calls for Action

As discussions about AI’s role in society intensify, the push for regulation grows more urgent. Events and forums continue to highlight the need for collective action to establish clear guidelines. Without effective legislation, unregulated AI could cause further public harm.

Conclusion

Moltbook exemplifies the complexities at the intersection of technology and society. While the platform could usher in innovative uses of AI, it also exposes significant risks that society must address. The dialogue surrounding AI regulation is vital to ensuring that these emerging technologies benefit everyone safely.