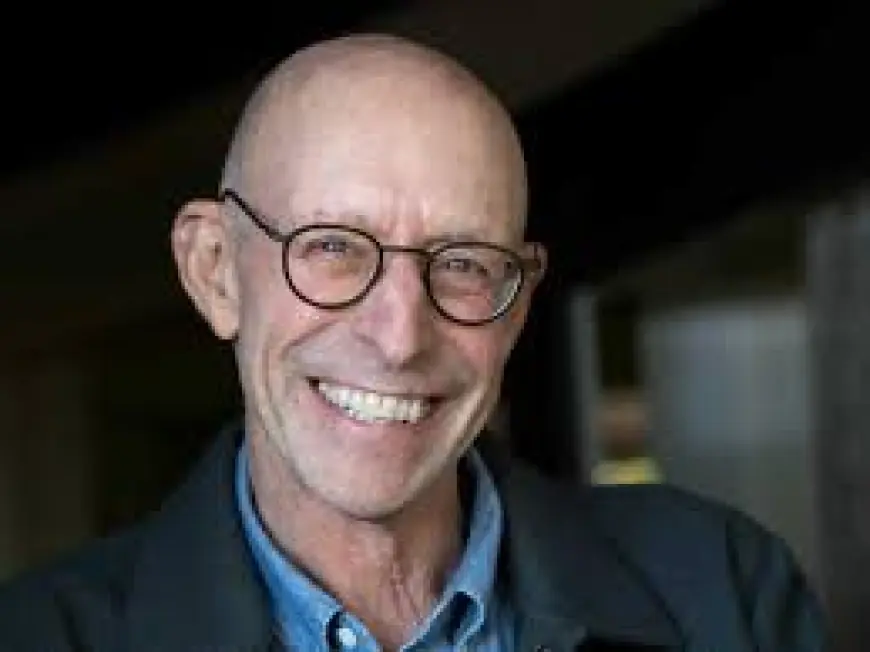

Michael Pollan on AI and the limits of consciousness

Michael Pollan said AI may 'think' but will never be conscious, a claim at the center of recent coverage that also includes a podcast on the inner voice and a first-person account of a magic-mushroom-fuelled search for consciousness. The pairing highlights an active public conversation about whether machine cognition and human subjective experience are the same thing, and why that distinction matters now.

Michael Pollan on AI

The statement that AI might "think" while lacking consciousness reframes debates about artificial cognition as less about functional performance and more about inner experience. That framing separates external behavior from the subjective aspect of mind, a distinction that has re-emerged across recent pieces examining thought and awareness.

Podcast explores inner voice

A recent podcast examined how scientists and philosophers studying the mind have discovered how little we know about our inner experiences. That coverage emphasized the limits of current methods for probing subjective thought, noting persistent gaps in understanding what it feels like to be aware and how private experience maps onto observable processes.

Psychedelic journey and consciousness

Alongside philosophical and scientific treatments, a personal account framed a search for consciousness through a magic-mushroom-fuelled journey. The narrative was presented as a first-person exploration rather than a clinical study, offering an experiential counterpoint to analytical approaches and reminding readers that individual reports continue to shape public ideas about what consciousness might be.

- Key takeaways: the debate separates observable thinking from private conscious experience; scientific work flags large unknowns about inner life; first-person psychedelic accounts persist as influential perspectives.

Analysis: The juxtaposition of a high-profile comment on machine thought, a podcast highlighting methodological uncertainty, and a personal psychedelic narrative underlines three converging threads. First, distinguishing cognitive competence from subjective awareness remains central to how the public and experts talk about AI. Second, empirical work on the mind still confronts foundational unknowns about private experience. Third, anecdotal reports of altered states continue to influence interpretations of consciousness even without standard scientific corroboration.

Forward look: If discussions continue to hinge on the difference between outward behavior and inner experience, public debate will likely emphasize philosophical definitions and first-person testimony as much as technical benchmarks. Should experimental tools improve for probing subjective states, the balance could shift toward empirical tests; absent that, expect the conversation to stay focused on conceptual distinctions and personal narratives. Specific claims about AI consciousness and about individual psychedelic experiences remain unsettled wherever direct evidence of inner states is limited or has not been publicly confirmed.