Clawdbot Rebrands as Moltbot as Viral Personal AI Assistants Collide With Trademarks and Security Risks

Clawdbot, a fast-rising personal AI assistant that runs continuously on a user’s own machine, has been renamed Moltbot after trademark concerns raised by AI company Anthropic. The rebrand, confirmed Tuesday, Jan. 27, 2026, capped a week in which the project surged in popularity for promising something many assistants still struggle with: taking actions, not just drafting answers.

The change has not slowed interest, but it has sharpened a second storyline around always-on AI agents: when software can read messages, send emails, and act across accounts, it also becomes a high-value target for mistakes, misconfigurations, and copycat scams.

From Clawdbot to Moltbot: a trademark-triggered name change

The project’s creator, developer Peter Steinberger, said the name change was not voluntary, describing it as a response to Anthropic’s concerns about branding similarities tied to its Claude product line and related trademarks. The new name, Moltbot, leans into the lobster theme that helped the tool stand out online, and the project’s stated mission remains the same: a free, open codebase that can handle everyday tasks through a chat-style interface.

The full scope of the trademark discussions, beyond the request to change branding, has not been made public, and no detailed legal basis for the change has been disclosed.

The episode highlights a familiar tension in consumer-facing software: catchy names travel fast, and once something goes viral, brand conflicts can arrive just as quickly.

Why the tool took off: “always on” help that actually executes

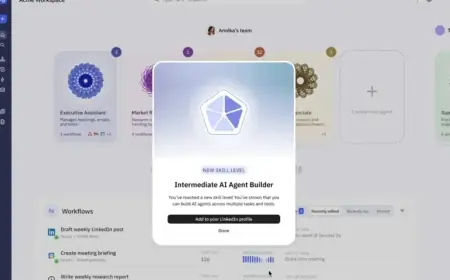

Moltbot’s appeal is straightforward. Instead of living only inside a browser tab, it is designed to run persistently in the background, ready to respond the moment a user sends a message. Users connect it to the services they already rely on, then ask it to schedule meetings, clear inbox clutter, send messages, prepare reminders, or complete other chores that normally require switching between apps and accounts.

Mechanically, the system works like a bridge between three layers: a conversation channel where the user speaks, an AI model that interprets intent, and a tool layer that can take actions like reading calendars, sending emails, filling forms, or running local commands. That last layer is what makes it feel powerful, and also what makes it risky. The more authority the tool layer has, the more damage can occur if something goes wrong.
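The three-layer flow described above can be sketched in a few lines of Python. This is an illustrative sketch only, not Moltbot's actual code: the names `interpret`, `TOOLS`, and `handle_message` are hypothetical, and a keyword matcher stands in for the AI model. It shows how the breadth of the tool layer's allow-list determines what a single message can set in motion.

```python
from dataclasses import dataclass
from typing import Callable, Dict, Set


@dataclass
class ToolCall:
    """A request from the model layer to the tool layer."""
    name: str
    argument: str


def interpret(message: str) -> ToolCall:
    """Stand-in for the AI model layer (hypothetical): keyword matching only."""
    text = message.lower()
    if "email" in text:
        return ToolCall("send_email", message)
    if "calendar" in text or "meeting" in text:
        return ToolCall("read_calendar", message)
    return ToolCall("reply", message)


# Tool layer (hypothetical): each tool only describes what it would do.
TOOLS: Dict[str, Callable[[str], str]] = {
    "send_email": lambda arg: f"[would send email for: {arg}]",
    "read_calendar": lambda arg: f"[would read calendar for: {arg}]",
    "reply": lambda arg: f"[plain reply to: {arg}]",
}


def handle_message(message: str, allowed_tools: Set[str]) -> str:
    """Conversation channel entry point: interpret, check permissions, act."""
    call = interpret(message)
    if call.name not in allowed_tools:
        return f"refused: tool '{call.name}' is not permitted"
    return TOOLS[call.name](call.argument)


if __name__ == "__main__":
    limited = {"reply", "read_calendar"}
    print(handle_message("What meetings do I have today?", limited))
    print(handle_message("Email Bob the notes", limited))
```

With the limited allow-list, the calendar request goes through and the email request is refused. Widening `allowed_tools` is what gives the assistant more reach, and more room for damage.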

It is not yet clear how many people have deployed the assistant with high-privilege permissions rather than safer, limited-access settings.

Security concerns rise alongside the hype

As the project’s popularity spiked, security warnings began circulating about misconfigured installations. Analysts have flagged scenarios where administrative control panels or gateways could be left reachable from the public internet without strong authentication. In those cases, an outsider who finds the interface may be able to view configuration details, access stored credentials, or see conversation histories, turning a convenience tool into a master key to someone’s digital life.
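The kind of misconfiguration analysts describe can be illustrated with a small pre-flight check. This is a sketch under stated assumptions, not a reflection of Moltbot's real settings: the function name `exposure_warnings`, the `bind_host` and `auth_token` parameters, and the 32-character minimum are all hypothetical. It flags a control panel that listens beyond the local machine or lacks a strong token.

```python
import ipaddress
from typing import List, Optional


def exposure_warnings(bind_host: str,
                      auth_token: Optional[str],
                      min_token_length: int = 32) -> List[str]:
    """Return warnings for a hypothetical gateway configuration."""
    warnings = []

    # A loopback address (127.0.0.1, ::1) is reachable only from this machine.
    try:
        loopback = ipaddress.ip_address(bind_host).is_loopback
    except ValueError:
        # Not an IP literal; treat only the name "localhost" as local.
        loopback = bind_host == "localhost"

    if not loopback:
        warnings.append(
            f"gateway listens on {bind_host}, which may be reachable from other machines"
        )

    # The minimum token length is an arbitrary illustrative threshold.
    if not auth_token or len(auth_token) < min_token_length:
        warnings.append("authentication token is missing or too short")

    return warnings


if __name__ == "__main__":
    for warning in exposure_warnings("0.0.0.0", None):
        print("WARNING:", warning)
```

A check like this catches only the simplest mistakes. Reverse proxies, port forwarding, and cloud firewall rules can expose a locally bound service in ways it would not see.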

The risks are not limited to exposure. Always-on agents are vulnerable to “prompt injection” style attacks, where a malicious instruction is embedded in content the agent reads, such as a message, document, or webpage. If the agent is allowed to execute tools automatically, a carefully crafted prompt can trick it into leaking secrets, changing settings, or performing unintended actions.
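One commonly discussed mitigation is to require explicit user confirmation before a sensitive tool runs on behalf of content the agent merely read. The sketch below illustrates that idea and is not a description of how Moltbot behaves: the tool names, the `source` labels, and the `authorize` function are hypothetical.

```python
# Hypothetical set of tools considered dangerous enough to need confirmation.
SENSITIVE_TOOLS = {"send_email", "run_command", "change_settings"}


def authorize(tool_name: str, source: str, user_confirmed: bool = False) -> bool:
    """Decide whether a tool call may proceed.

    source is "user" when the instruction came directly from the owner,
    and anything else (for example "web_page" or "inbox") when it was
    derived from content the agent read.
    """
    if source == "user":
        return True
    if tool_name in SENSITIVE_TOOLS:
        # Instructions found in untrusted content never run sensitive
        # tools automatically; the owner must approve them first.
        return user_confirmed
    return True


if __name__ == "__main__":
    print(authorize("send_email", "user"))                          # direct request
    print(authorize("send_email", "web_page"))                      # injected request
    print(authorize("send_email", "web_page", user_confirmed=True))
```

A gate like this narrows the risk but does not remove it. It depends on the agent tracking where each instruction came from, which is itself hard to guarantee.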

The popularity wave also attracted opportunistic impersonation. Security teams identified a fake add-on for a widely used code editor that presented itself as a helpful assistant but installed remote-access malware on machines as soon as the editor launched. The legitimate Clawdbot team had not released an official add-on of that type at the time, which made the impersonation easier for attackers who moved quickly to claim the name.

It has not been disclosed how many people, if any, were affected by that specific impersonation attempt.

Who is affected and what comes next

Two groups feel this moment most directly: everyday users experimenting with personal automation, and developers building the ecosystem around agent-like assistants. For users, the upside is obvious: less busywork and fewer context switches. The downside is less obvious until something breaks: a single exposed token or misconfigured control panel can spill private data, and the impact can cascade across email, calendars, and connected accounts.

For developers and security teams, the stakes are higher than a typical app bug. Agentic tools compress many permissions into one place, which changes threat modeling. It also accelerates the “lookalike” problem: once a name trends, copycats can ship convincing clones, bundle malware, or ride the brand for scams, especially during rapid growth.

The next verifiable milestone will be the project’s upcoming tagged software release and its accompanying security notes, which will show whether default settings tighten further to reduce accidental exposure and whether the team adds clearer safeguards for connecting high-value accounts.