Clawdbot Becomes Moltbot After Trademark Pressure, and the Rebrand Exposes the Dark Side of Viral AI Agents

Clawdbot, a self-hosted “AI assistant with hands” that rocketed from niche curiosity to must-try tool in a matter of weeks, is now Moltbot—after its creator said he was asked to change the name over trademark concerns tied to a lobster-themed mascot used by another AI company’s coding product. The rename, finalized in recent days and widely discussed on Wednesday and Thursday, January 28–29, 2026 (ET), didn’t just swap a label. It triggered a predictable cascade: account hijacks, scam tokens, copycat downloads, and a wave of security warnings about people running powerful agent dashboards on the open internet.

The headline is a rebrand. The real story is that consumer-grade AI agents are starting to behave like enterprise software—without enterprise-grade guardrails.

What happened: why Clawdbot had to “molt” into Moltbot

The project’s identity was built around a lobster motif and a name that sounded close enough to an existing mascot-brand pairing to create legal risk. Rather than pursue a prolonged dispute, the creator complied and rebranded the project as Moltbot (with a matching mascot rename), framing it as “shedding a shell to grow.”

But the rebrand day also revealed how fragile a viral project’s ecosystem can be. Opportunists briefly hijacked a related social account to push crypto promotions, and the name-change moment became an attention spike that scammers could exploit faster than the community could correct.

That timing matters. When you rename a project at peak hype, you create a high-confusion window—exactly when bad actors can blend in.

Why Moltbot is blowing up: a local agent that can actually do things

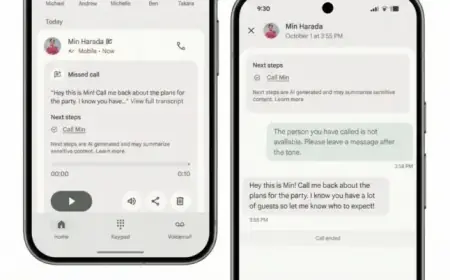

Moltbot’s appeal is simple: it runs on a user’s own machine, can keep long-term memory in local files, and can take real actions across tools rather than just chatting. It’s the “assistant” people have been promised for years, but with actual agency—automation, scheduling, file operations, web tasks, and system-level control depending on configuration.

That power is also the risk. A chatbot that answers questions is a privacy concern. An agent that can execute commands and touch accounts is a security boundary.

The security wake-up call: exposed dashboards and “keys to the kingdom” mistakes

In the last 48 hours, the most alarming theme has been misconfiguration. Security analysts have warned that many users deployed Moltbot control panels and agent gateways in ways that left them reachable from the public internet—sometimes without proper authentication, sometimes behind poorly configured reverse proxies, sometimes with sensitive tokens stored in ways that could be extracted if an attacker got access.
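
To make the misconfiguration concrete, here is a minimal sketch of the kind of check a user could run against their own listener settings. It is not Moltbot's code or configuration format: the function names `classify_bind_address` and `audit_listener`, and the `auth_enabled` flag, are hypothetical and exist only for illustration. The idea is simply that a service bound to anything other than a loopback address is reachable beyond the local machine, and that a loopback-only service without authentication can still be exposed by a reverse proxy.

```python
import ipaddress


def classify_bind_address(host: str) -> str:
    """Classify a listen host as 'loopback', 'all-interfaces',
    'specific-interface', or 'unknown' (hypothetical helper)."""
    if host in ("", "*"):
        # Many servers treat an empty host or '*' as "listen everywhere".
        return "all-interfaces"
    if host == "localhost":
        return "loopback"
    try:
        addr = ipaddress.ip_address(host)
    except ValueError:
        # A hostname we cannot resolve here; treat it as unknown.
        return "unknown"
    if addr.is_loopback:
        return "loopback"
    if addr.is_unspecified:
        # 0.0.0.0 or :: means every interface on the machine.
        return "all-interfaces"
    return "specific-interface"


def audit_listener(host: str, auth_enabled: bool) -> list[str]:
    """Return human-readable findings for one listener (illustrative only)."""
    findings = []
    kind = classify_bind_address(host)
    if kind != "loopback":
        findings.append(
            f"listener on {host!r} ({kind}) may be reachable from other machines"
        )
    if not auth_enabled:
        if kind == "loopback":
            findings.append(
                "no authentication: a reverse proxy could still expose this listener"
            )
        else:
            findings.append("no authentication on a non-loopback listener")
    return findings


if __name__ == "__main__":
    for host, auth in [("127.0.0.1", True), ("0.0.0.0", False)]:
        print(host, audit_listener(host, auth))
```

A check like this only inspects settings; it says nothing about firewalls, tunnels, or cloud security groups, which would need to be verified separately.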

When an AI agent has permissions, a compromise isn’t just a “data leak.” It can become an “account takeover,” because:

- An exposed admin interface can reveal API keys, session tokens, or integration credentials
- An attacker can trigger actions (send messages, modify files, run commands) under the agent’s identity
- Prompt-injection-style manipulation can coerce an agent into leaking secrets or performing unintended operations, especially if it reads untrusted content

This is why “agent security” is suddenly mainstream. People aren’t just running software. They’re running software that can act.
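
One common way to limit the damage described in the list above is a deny-by-default action policy. The sketch below is an assumption-laden illustration, not a description of how Moltbot works: the action names, the `ActionRequest` type, and the `decide` function are all hypothetical. It shows the general pattern of allowing only known low-risk actions automatically, requiring human confirmation for high-risk ones, and refusing high-risk actions outright when the request originated from untrusted content such as a web page or an inbound message.

```python
from dataclasses import dataclass
from enum import Enum


class Decision(Enum):
    ALLOW = "allow"
    CONFIRM = "confirm"  # ask a human before proceeding
    DENY = "deny"


# Hypothetical action names, chosen only for illustration.
LOW_RISK = frozenset({"read_calendar", "search_web"})
HIGH_RISK = frozenset({"send_message", "write_file", "run_command"})


@dataclass(frozen=True)
class ActionRequest:
    name: str
    # True when the request was derived from content the user did not write,
    # for example a fetched web page or an incoming email.
    from_untrusted_content: bool


def decide(request: ActionRequest) -> Decision:
    """Deny by default; escalate when untrusted content is involved."""
    if request.name in LOW_RISK:
        if request.from_untrusted_content:
            return Decision.CONFIRM
        return Decision.ALLOW
    if request.name in HIGH_RISK:
        if request.from_untrusted_content:
            return Decision.DENY
        return Decision.CONFIRM
    # Anything not explicitly listed is refused.
    return Decision.DENY


if __name__ == "__main__":
    print(decide(ActionRequest("run_command", from_untrusted_content=True)))
    print(decide(ActionRequest("search_web", from_untrusted_content=False)))
```

A policy like this does not stop prompt injection itself. It only narrows what an injected instruction can accomplish, which is why it is usually paired with the binding and authentication hygiene discussed earlier.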

Behind the headline: incentives that almost guarantee chaos during virality

This episode is a case study in how incentives collide:

- Creators optimize for onboarding speed and delight, because friction kills adoption.
- Users optimize for “make it work,” often copying commands and opening ports without fully understanding exposure.
- Attackers optimize for confusion and scale, and rebrands create both.
- Trademark holders optimize for brand clarity, but enforcement can unintentionally amplify a project’s visibility, raising both legitimate interest and scam volume.

The second-order effect is bigger than Moltbot itself: it’s training the market to treat personal AI agents like high-risk infrastructure. The moment an agent can touch email, calendars, passwords, or payments, it stops being a toy.

What we still don’t know

Several missing pieces will determine whether Moltbot becomes a durable platform or a cautionary tale:

- How many exposed instances were reachable at the peak, and how quickly users secured them
- Whether the project will formalize security defaults (local-only binding, mandatory auth, safer pairing)
- Whether independent audits will validate the agent’s design assumptions and threat model
- How the ecosystem will handle third-party add-ons, which tend to be the most common supply-chain weak point
- How governance evolves now that the name, identity, and community momentum have shifted at once

What happens next: realistic scenarios and triggers

- Security-first defaults ship quickly. Trigger: continued reports of exposed control panels and credential leaks.
- A “safe mode” becomes the standard install path. Trigger: mainstream users adopt the tool and demand guardrails without losing functionality.
- Scam waves persist around the old name. Trigger: lingering search traffic for “Clawdbot” keeps funneling people into impersonation traps.
- Enterprises fork or wrap the project. Trigger: organizations want the capabilities but require auditing, access controls, and policy enforcement.
- A broader crackdown on unsafe agent deployments. Trigger: a high-profile incident where an exposed agent is used to access real accounts or systems.

Moltbot’s rebrand from Clawdbot is the kind of internet drama that feels small—until you realize it’s an early warning about the agent era. As software gains the ability to act on your behalf, the first question isn’t “what can it do.” It’s “what can someone else make it do if you set it up wrong.”