Microsoft Debuts AI Chip, Challenging Amazon and Google

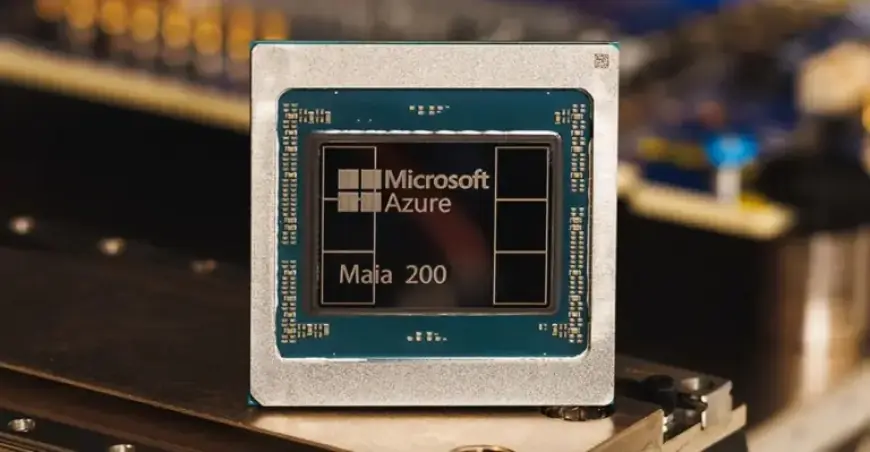

Microsoft has unveiled the Maia 200, its latest in-house AI chip and the successor to the Maia 100. The chip is manufactured on TSMC’s 3nm process.

Key Features of the Maia 200 AI Chip

The Maia 200 contains more than 100 billion transistors. According to Microsoft, it delivers:

- Three times the FP4 performance of Amazon’s third-generation Trainium.

- FP8 performance exceeding that of Google’s seventh-generation TPU.

- 30% better performance per dollar than Microsoft’s existing hardware.

Scott Guthrie, executive vice president of Microsoft’s Cloud and AI division, said the Maia 200 can run today’s largest AI models and has headroom for more demanding models in the future.

Applications for the Maia 200

The Maia 200 will host OpenAI’s GPT-5.2 model, along with workloads for Microsoft Foundry and Microsoft 365 Copilot. Microsoft’s Superintelligence team will be the first to use the chip.

Collaboration and Future Development

Microsoft is also opening the platform to outside developers. The company is inviting academics, developers, AI labs, and open-source contributors to join an early preview of the Maia 200 software development kit (SDK).

Deployment and Competitors

Microsoft has begun deploying Maia 200 chips in its Azure US Central data center, with plans to expand to additional regions.

Both Google and Amazon are developing next-generation AI chips of their own. Amazon, for example, is working with Nvidia to integrate its upcoming Trainium4 chip with NVLink 6 and Nvidia’s MGX rack architecture.

With the Maia 200, Microsoft is positioning itself to compete more directly with Amazon and Google in custom AI chips.