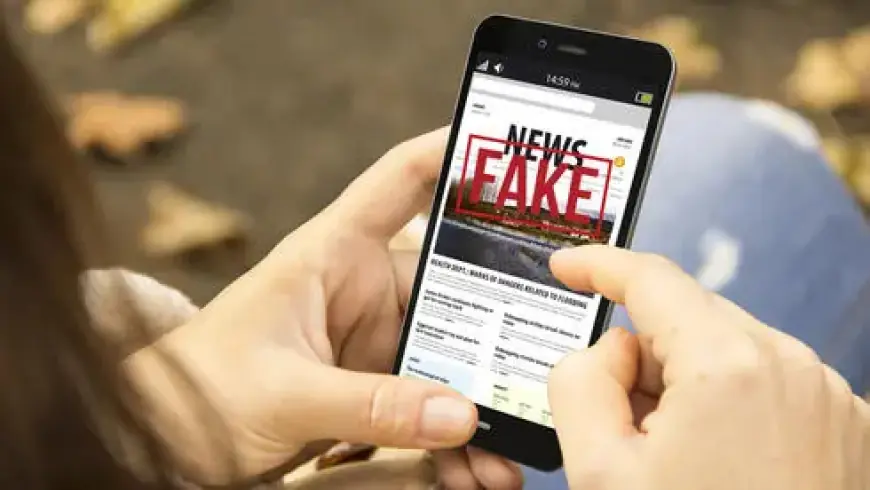

Microsoft Report: Teens Struggle to Detect Deepfakes Amid Rising AI Concerns

A recent Microsoft report finds that teenagers are struggling to recognize deepfakes and the risks they pose in an AI-driven digital landscape. The annual global study, released to coincide with International Safer Internet Day, reveals that only 25% of teens can accurately identify manipulated media, a significant decline from 46% in the previous year.

Key Findings from Microsoft’s Study

The survey, conducted last summer, involved 14,797 participants across 15 countries. It aimed to assess young people's digital experiences as AI technologies rapidly advance.

Decline in Deepfake Detection

- Only 25% of teenagers can identify deepfake content.

- This marks a steep drop from 46% in 2022.

- 91% of respondents expressed concerns about AI-related risks.

Rise in Digital Risks

While teenagers feel more productive and connected, they also report heightened feelings of insecurity online. The study noted a significant increase in exposure to online dangers, with 64% of teens experiencing at least one type of digital risk in the past year.

- 35% experienced hate speech.

- 29% encountered online scams.

- 23% reported incidents of cyberbullying.

Increased Awareness and Protective Actions

Despite the risks, many teenagers are taking action: 72% discussed their experiences with someone, and around 75% reported taking steps to enhance their safety, such as blocking users or deleting accounts.

Generative AI Adoption Among Teens

The use of generative AI has surged among teenagers. Weekly use rose from 13% in 2023 to 38% in 2025. The most common uses are:

- 42% for answering questions.

- 41% for planning.

- 37% for improving work efficiency.

Concerns Over AI Usage

Apprehension about AI remains widespread among young people. Key areas of concern include:

- 78% fear sexual exploitation or abuse.

- 77% worry about AI-based scams.

- 70% are concerned about privacy violations.

A notable 81% of those surveyed believe that technology companies should take stronger measures to manage harmful content online. Requested safety features include filtering explicit content and limiting messaging to known contacts.

Microsoft’s Response and Initiatives

Microsoft emphasizes the need for comprehensive approaches to digital safety, particularly in the era of AI. The company has launched the AI Futures Youth Council to gather feedback from teenagers in the U.S. and the EU on emerging technologies. It is also collaborating with the Cyberlite organization to study how teens aged 13 to 17 engage with AI tools.

Noa Gevaon, Microsoft’s director of government relations in Israel, pointed out that effective digital safety requires a combination of policy, technology, and education. The ongoing research underscores the importance of building resilience among young people as they navigate an increasingly complex digital landscape.