Report Reveals 100+ AI-Hallucinated Citations in NeurIPS Research Papers

A recent analysis by the Canadian startup GPTZero has found AI-generated errors in research papers accepted at NeurIPS 2025. The prestigious AI research conference, held in San Diego in December 2025, received more than 21,000 submissions. Among the accepted papers GPTZero examined, more than 50 reportedly contained AI-hallucinated citations that had escaped the scrutiny of multiple reviewers.

AI-Hallucinated Citations Detected

GPTZero analyzed more than 4,000 accepted research papers and found more than 100 fabricated references. These hallucinations ranged from entirely invented papers and authors to subtle alterations of real citations. Some attributed works to nonexistent authors or cited journal titles that do not exist.

Types of Hallucinations

- Fully Fabricated Citations: References to papers and authors that do not exist at all.

- Altered Real Citations: Real papers cited with changed author names or titles.

- Misleading URLs: Links in some citations that point to nonexistent resources.

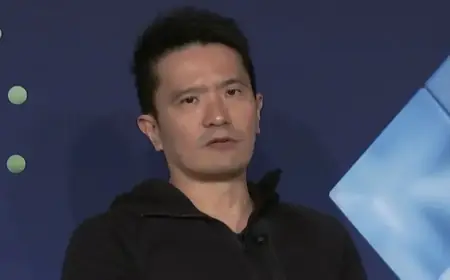

Edward Tian, cofounder and CEO of GPTZero, expressed concerns over the implications of these findings, especially since the papers affected had passed peer review. The NeurIPS conference emphasizes scientific rigor, and under traditional academic norms, any instance of fabrication is typically grounds for rejection.

Implications for AI Research and Peer Review

The NeurIPS board acknowledged that large language models (LLMs) are evolving rapidly and affecting research submissions. It noted that in 2025 reviewers were specifically instructed to flag hallucinations during review. Even if approximately 1.1% of papers contained one or more erroneous references due to LLM use, the board said, this does not by itself invalidate the content of those papers.

Tian argued that citation errors are particularly troubling in a field as competitive as AI research, where publishing is crucial for career advancement. NeurIPS 2025 accepted just 24.52% of submissions, which underscores how much is at stake for research integrity.

Future Steps

To address these issues, GPTZero has developed a hallucination detection tool that validates citations against academic databases. GPTZero says the tool is more than 99% accurate, and citations it flags are verified by humans. The upcoming ICLR conference in Rio de Janeiro has also enlisted GPTZero to check its submissions for similar errors.
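GPTZero has not published how its tool works, so the following is only a hypothetical sketch of the general idea: compare each citation against known references, label it using the three hallucination types listed earlier, and route anything not verified to a human reviewer. It assumes a small in-memory index in place of real academic databases, and uses `difflib` string similarity with an arbitrary 0.85 threshold as a stand-in for whatever matching the actual tool performs. The names `Reference`, `classify`, and `triage` and all example data are invented for illustration.

```python
from __future__ import annotations

from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Reference:
    """A single citation (hypothetical structure, not GPTZero's)."""
    title: str
    authors: tuple[str, ...]
    url: str | None = None


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in [0, 1], via difflib's ratio()."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def classify(ref: Reference, index: list[Reference], known_urls: set[str],
             threshold: float = 0.85) -> str:
    """Label a citation 'fabricated', 'altered', 'bad_url', or 'verified'.

    The index stands in for an academic database; the threshold is an assumption.
    """
    # Find the closest known title; an empty index counts as no match at all.
    best, score = max(((known, similarity(ref.title, known.title)) for known in index),
                      key=lambda pair: pair[1], default=(None, 0.0))
    if best is None or score < threshold:
        return "fabricated"   # nothing in the index resembles this title
    if ref.title.lower() != best.title.lower() or set(ref.authors) != set(best.authors):
        return "altered"      # near match, but the title or author list differs
    if ref.url is not None and ref.url not in known_urls:
        return "bad_url"      # citation matches, but its link is not a known resource
    return "verified"


def triage(refs: list[Reference], index: list[Reference],
           known_urls: set[str]) -> list[tuple[Reference, str]]:
    """Collect every citation that is not verified, for human review."""
    flagged = []
    for ref in refs:
        verdict = classify(ref, index, known_urls)
        if verdict != "verified":
            flagged.append((ref, verdict))
    return flagged


if __name__ == "__main__":
    # Hypothetical example data, not real papers.
    index = [Reference("Scaling Laws for Example Models", ("A. Author", "B. Author"),
                       "https://example.org/paper1")]
    known_urls = {"https://example.org/paper1"}
    submitted = [
        Reference("Scaling Laws for Example Models", ("A. Author", "B. Author"),
                  "https://example.org/paper1"),
        Reference("Scaling Law for Example Models", ("A. Author", "B. Author")),
        Reference("Quantum Gardening with Transformers", ("Z. Nobody",)),
    ]
    for ref, verdict in triage(submitted, index, known_urls):
        print(verdict, "->", ref.title)
```

A real checker would query actual bibliographic sources and normalize author names (initials, accents, ordering) before comparing them; this sketch compares exact strings, so it would over-flag formatting differences, which is one reason a human verification step matters.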

Challenges in Peer Review

The sheer volume of submissions at leading conferences like NeurIPS complicates peer review. In 2025, the NeurIPS main track received 21,575 valid submissions, more than in previous years. Conferences rely on volunteer reviewers to handle this influx, yet thorough checks remain critical to maintaining research credibility.
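As a rough cross-check of the figures quoted in this article, the snippet below estimates how many papers were accepted and what share of them GPTZero's sample covered. It assumes the 24.52% acceptance rate applies to the 21,575 valid main-track submissions, and it treats "more than 4,000" analyzed papers as exactly 4,000, so the coverage figure is a lower bound. The constant and function names are illustrative only.

```python
# Figures quoted in the article; the accepted-paper count is derived, not reported.
VALID_SUBMISSIONS = 21_575   # NeurIPS 2025 main track, valid submissions
ACCEPTANCE_RATE = 0.2452     # 24.52%, assumed to apply to the main track
ANALYZED_MIN = 4_000         # GPTZero analyzed "more than 4,000" accepted papers


def estimated_accepted(submissions: int, rate: float) -> int:
    """Estimate accepted papers as submissions times acceptance rate, rounded."""
    return round(submissions * rate)


accepted = estimated_accepted(VALID_SUBMISSIONS, ACCEPTANCE_RATE)
coverage = ANALYZED_MIN / accepted  # lower bound on the share of accepted papers analyzed
print(f"~{accepted} accepted papers; GPTZero's sample covered at least {coverage:.0%}")
```

Under these assumptions the conference accepted roughly 5,290 papers, so GPTZero's analysis covered most, though not all, of the accepted work.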

AI writing tools may make papers easier to produce, but flawed citations pose significant reputational risks for authors and for the conferences that publish their work. Because accurate citations underpin the reproducibility of results in AI research, hallucinated citations are a particularly urgent problem.