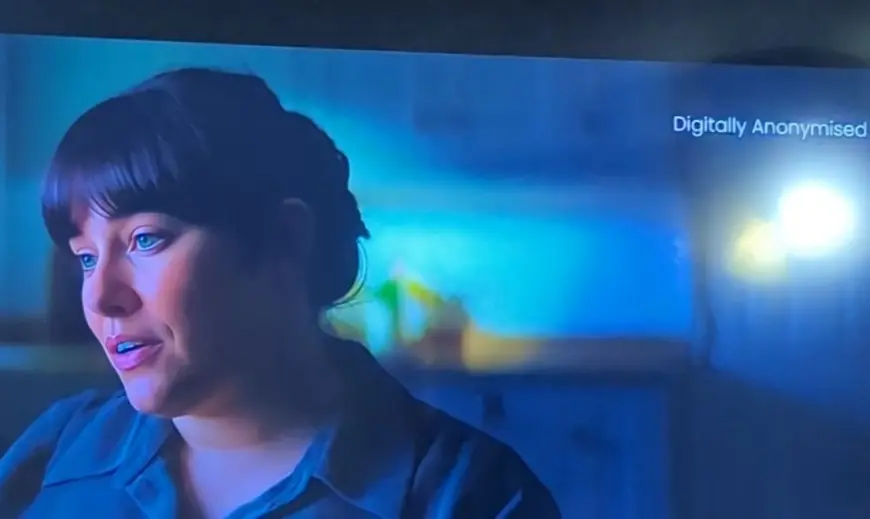

“Digitally anonymized” is everywhere—regulators are tightening what it’s allowed to mean

In privacy notices, app permissions, and data-sharing contracts, “digitally anonymized” has become a catch-all reassurance: your information was transformed so it can’t identify you. In 2026, that wording is facing sharper scrutiny as regulators and courts press a simple question—can a person still be singled out, linked, or inferred from what’s left?

The shift matters because “anonymized” data is often treated as lower-risk and more shareable than personal data. If regulators decide a dataset is only “pseudonymized” or “de-identified” rather than truly anonymous, organizations may face tighter legal duties on transparency, security, retention, and onward transfers.

What “digitally anonymized” usually signals

“Digitally anonymized” is not a single technique. It typically describes a bundle of steps intended to remove or obscure identifiers. Common examples include stripping names and account IDs, generalizing dates or locations, masking rare attributes, and aggregating results so no record stands out.
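To make those steps concrete, here is a minimal sketch of stripping direct identifiers and generalizing dates, locations, and ages in one record. The field names (`name`, `account_id`, `visit_date`, `zip`, `age`) and the chosen granularity (month, three-digit ZIP prefix, ten-year age band) are assumptions for illustration only. They are not a recommended standard, and a transformation like this does not by itself make data anonymous.

```python
from datetime import date

# Hypothetical direct identifiers; a real schema will differ.
DIRECT_IDENTIFIERS = {"name", "account_id"}


def generalize_record(record: dict) -> dict:
    """Return a copy with direct identifiers dropped and three fields coarsened."""
    # Strip direct identifiers.
    out = {k: v for k, v in record.items() if k not in DIRECT_IDENTIFIERS}

    # Generalize an exact date to year and month.
    visit = out.pop("visit_date")
    out["visit_month"] = f"{visit.year}-{visit.month:02d}"

    # Generalize a five-digit ZIP code to its first three digits.
    out["zip3"] = out.pop("zip")[:3]

    # Generalize an exact age to a ten-year band.
    low = (out.pop("age") // 10) * 10
    out["age_band"] = f"{low}-{low + 9}"
    return out


example = {
    "name": "Ana Example",
    "account_id": "A-1",
    "visit_date": date(2026, 3, 14),
    "zip": "94110",
    "age": 37,
    "diagnosis": "flu",
}
print(generalize_record(example))
```

Coarser bands lower the chance that a record stands out, at the cost of analytic detail. The right trade-off depends on who receives the data.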

The core promise is irreversibility: no practical path to re-identify individuals, either directly or indirectly, using available means. That’s a high bar. If someone can reasonably link records back to individuals—by combining the dataset with other data, using modern analytics, or exploiting unique patterns—then the transformation may be closer to pseudonymization than anonymization.

Why “anonymous” can stop being anonymous

A growing body of work shows that re-identification risk often comes from the “ordinary” fields people assume are harmless. Three drivers keep coming up:

- Linkability: Seemingly non-identifying attributes (age band, ZIP code, device signals, timestamps, hospital visit patterns) can match external records.
- Singling out: Even without a name, a person can be uniquely identifiable inside a dataset because their combination of traits is rare.
- Inference: Modern models can infer sensitive traits from patterns, even when explicit identifiers are removed.
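Singling out can be checked mechanically. The sketch below counts how many records share each combination of quasi-identifiers and flags records whose combination appears fewer than `k` times. This is the idea behind k-anonymity, a common measure that the sources above do not necessarily prescribe. The column names and the threshold are assumptions, and the check says nothing about inference risk.

```python
from collections import Counter


def equivalence_class_sizes(rows, quasi_identifiers):
    """Count records per combination of quasi-identifier values."""
    return Counter(tuple(row[q] for q in quasi_identifiers) for row in rows)


def singled_out(rows, quasi_identifiers, k=2):
    """Return records whose quasi-identifier combination occurs fewer than k times."""
    sizes = equivalence_class_sizes(rows, quasi_identifiers)
    return [
        row
        for row in rows
        if sizes[tuple(row[q] for q in quasi_identifiers)] < k
    ]


# Hypothetical rows: the third record is the only one in its age band.
rows = [
    {"age_band": "30-39", "zip3": "941", "diagnosis": "flu"},
    {"age_band": "30-39", "zip3": "941", "diagnosis": "asthma"},
    {"age_band": "80-89", "zip3": "941", "diagnosis": "flu"},
]
print(singled_out(rows, ["age_band", "zip3"], k=2))
```

A record flagged here is unique within the dataset. Whether it can be tied to a real person still depends on what outside data exists.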

This is why many compliance teams have moved away from one-time “scrub and ship” anonymization toward continuous risk management—testing re-identification risk, limiting data release formats, and tracking what outside data could plausibly be used to connect the dots.

New pressure from courts and regulators

Recent European developments have raised the stakes. In September 2025, the Court of Justice of the European Union addressed when pseudonymized data transferred to another party counts as personal data in the recipient's hands. The court tied the answer to whether the recipient can reasonably identify individuals, taking technical, organizational, and legal factors into account. That reasoning has pushed regulators to focus less on labels and more on practical capability.

In late 2025, European regulators organized work to revisit guidance on anonymisation and pseudonymisation, explicitly tying it to that court decision and seeking stakeholder input. The direction is clear: assessments will hinge on context—who holds the keys, what other data exists, and what a capable party could do with realistic effort.

In the United Kingdom, the data protection regulator has also emphasized clearer distinctions among anonymisation, pseudonymisation, and looser “de-identified” phrasing, alongside guidance updates that stress real-world re-identification risk rather than theoretical intent.

What “good” anonymization looks like in 2026

The strongest programs treat “digitally anonymized” as a claim that must be demonstrated, not declared. That often means:

- Designing anonymization to a specific release context (internal analytics vs. third-party sharing vs. public release).
- Using layered techniques (e.g., generalization plus suppression plus aggregation), not a single masking step.
- Testing with adversarial thinking: can records be matched to known individuals, reconstructed, or inferred?
- Documenting residual risk and the controls that keep it low (access limits, contractual restrictions, audit rights, and prohibitions on re-identification attempts).
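One simple form of adversarial testing is a trial linkage. The sketch below joins a release candidate to an outside dataset on shared quasi-identifiers and reports every released record that matches exactly one outside person. The `external` dataset, the key names, and the exact-match rule are all assumptions. A real test would use the data a plausible recipient could actually obtain, and might also try fuzzy matching.

```python
from collections import defaultdict


def unique_links(released, external, keys):
    """Pair each released record with an external person when the match is unique."""
    # Index the external dataset by its quasi-identifier combination.
    index = defaultdict(list)
    for person in external:
        index[tuple(person[k] for k in keys)].append(person)

    links = []
    for row in released:
        candidates = index.get(tuple(row[k] for k in keys), [])
        if len(candidates) == 1:  # exactly one candidate means a confident link
            links.append((row, candidates[0]))
    return links


# Hypothetical data: only the 80-89 record links to a single named person.
released = [
    {"age_band": "30-39", "zip3": "941", "diagnosis": "asthma"},
    {"age_band": "80-89", "zip3": "941", "diagnosis": "flu"},
]
external = [
    {"name": "Person A", "age_band": "30-39", "zip3": "941"},
    {"name": "Person B", "age_band": "30-39", "zip3": "941"},
    {"name": "Person C", "age_band": "80-89", "zip3": "941"},
]
for row, person in unique_links(released, external, ["age_band", "zip3"]):
    print(person["name"], "->", row["diagnosis"])
```

The share of released records that link uniquely gives a rough, documentable figure for residual risk in that release context.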

A key point: if data remains useful at a fine-grained, record-by-record level, that can be a sign it may still be linkable—especially in small populations, niche categories, or datasets with detailed timestamps and locations.

Key takeaways for readers and organizations

- “Digitally anonymized” is a marketing-friendly phrase, but legal and technical tests focus on practical re-identification risk.
- Courts and regulators are increasingly using a context-based lens: who receives the data, what tools exist, and what “reasonable means” could achieve.
- Many datasets are safer when shared as aggregates, statistics, or privacy-preserving outputs rather than raw rows.
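As an illustration of sharing aggregates instead of raw rows, the sketch below releases group counts and withholds any count under a minimum cell size. The threshold of 5 and the grouping column are assumptions, not a legal standard. Suppressed cells can sometimes be recovered from published totals, so this is a starting point rather than a guarantee.

```python
from collections import Counter


def suppressed_counts(rows, group_by, min_cell_size=5):
    """Count rows per group, replacing counts below min_cell_size with None."""
    counts = Counter(tuple(row[g] for g in group_by) for row in rows)
    return {
        key: (n if n >= min_cell_size else None)
        for key, n in counts.items()
    }


# Hypothetical rows: region "B" has too few records to publish.
rows = [{"region": "A"}] * 5 + [{"region": "B"}] * 2
print(suppressed_counts(rows, ["region"]))
```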

Where this lands in 2026 is likely to be more precision, not more comfort: fewer blanket claims and more conditional statements about what was done, what risk remains, and what safeguards surround the data after it leaves the original holder.

Sources consulted: European Data Protection Board, UK Information Commissioner’s Office, Court of Justice of the European Union, Nature Digital Medicine