ChatGPT Health Personalizes Info, but Risks Loom

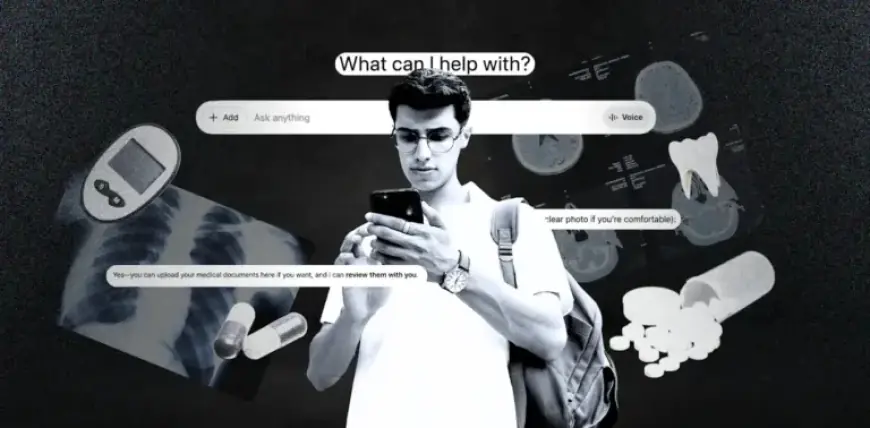

Generative artificial intelligence (AI) tools such as ChatGPT are increasingly being used for personalized health advice. OpenAI recently launched ChatGPT Health, a tool designed to tailor its responses to a user’s individual health data. The feature is promising, but it also raises significant concerns about safety and accuracy.

ChatGPT Health: Features and Personalization

ChatGPT Health personalizes interactions by letting users connect their health records and wellness applications. Users can upload diagnostic data and receive guidance informed by their personal health information. OpenAI says these interactions are kept separate from general ChatGPT conversations, a measure it presents as enhancing privacy and security.

OpenAI is collaborating with more than 260 clinicians from over 60 countries, including Australia, to refine ChatGPT Health. The collaboration is intended to improve the quality of the AI’s responses so that its health insights are more accurate and useful.

AI Health Advice: Usage Statistics

- In a 2024 study, 46% of Australians reported using an AI tool recently.

- One in four ChatGPT users worldwide submits health-related queries every week.

- Approximately 10% of Australians asked ChatGPT health-related questions in the past six months.

- Among those who hadn’t used AI for health queries, 39% expressed interest in doing so soon.

Safety Concerns and Risks

Despite the promise of personalized responses, independent research indicates that generative AI tools, including ChatGPT, sometimes deliver unsafe health advice, and there have been notable instances of misleading recommendations that could put users at risk. The accuracy of ChatGPT Health’s advice in particular remains uncertain because the tool has not undergone independent testing.

OpenAI has made it clear that ChatGPT Health is not a substitute for professional medical care and is not intended for diagnoses or treatment recommendations. The lack of independent oversight raises questions about whether the tool complies with Australian medical standards, particularly for vulnerable populations such as First Nations people and people with disabilities.

Best Practices for Utilizing AI Health Tools

High-Risk Health Questions

For health inquiries requiring expert clinical knowledge, seeking advice from a healthcare professional is crucial. These high-risk questions may involve:

- Understanding symptoms

- Seeking treatment recommendations

- Interpreting medical test results

Lower-Risk Health Questions

Conversely, lower-risk questions that seek general information are better suited to AI tools. These might include:

- Learning about health conditions

- Understanding medical terms

- Preparing questions for medical appointments

AI should complement traditional sources of information rather than replace them. In Australia, 1800 MEDICARE and the healthdirect Symptom Checker provide reliable, free support for people who need immediate assistance.

The Future of AI in Healthcare

As AI health tools become staples of healthcare, people need clear, reliable information about their effectiveness and limitations. For these technologies to be integrated into everyday health management, they must prioritize accuracy, equity, and transparency. Giving communities the knowledge and skills to use them safely will be essential to realizing their potential.