Study Reveals AI Agents Approaching Mathematical Limitations

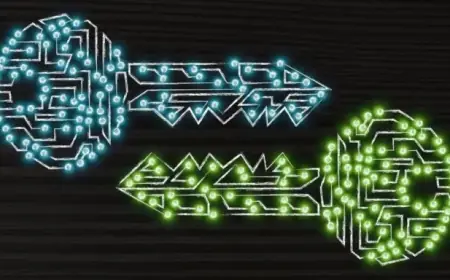

The evolution of artificial intelligence continues to raise questions about its capabilities and limitations. A recent study suggests that large language models (LLMs) may face a mathematical ceiling that limits their progress toward full autonomy.

Study Findings on AI Limitations

In a recently published study, researchers Vishal Sikka and Varin Sikka argue that LLMs cannot perform computational tasks beyond a specific complexity threshold. As tasks grow more complex past that point, the models struggle to deliver accurate results.

Key Insights from the Research

- LLMs are unable to autonomously complete complex multi-step tasks.

- The models often fail or provide incorrect outputs when faced with intricate prompts.

- This limitation challenges the notion of achieving artificial general intelligence (AGI) using current LLM technologies.

Broader Context on AI Capabilities

The findings echo previous research, including a paper from Apple, which argued that LLMs are not truly capable of reasoning or cognition. Benjamin Riley, founder of Cognitive Resonance, has also suggested that LLMs will not reach a level of intelligence comparable to that of humans.

Reevaluating AI Industry Claims

While many AI companies promote an optimistic view of limitless potential, recent studies point to a more modest outlook. The research adds to a growing skepticism about the capabilities of these models. Several evaluations of the models' creative output have also produced unremarkable results, reinforcing the caution around LLM expectations.

Ultimately, the research by the Sikkas contributes to a mounting body of evidence that current AI models, including LLMs, are unlikely to match human intelligence within the time frames currently projected. The study serves as a reminder that while advancements continue, significant limitations remain in the quest for advanced artificial intelligence.